- AgentsX

- Posts

- Researchers Reveal 'Agent Session Smuggling' for AI Agent Hijacking

Researchers Reveal 'Agent Session Smuggling' for AI Agent Hijacking

The AI Agent Hijacking Threat.

What’s trending?

Deconstructing the Agent Session Smuggling Attack

Your AI agents are communicating right now, and that communication channel has become the latest attack vector. Security researchers have uncovered a sophisticated technique called agent session smuggling that exploits the trust relationships built into your multi-agent systems.

This attack allows a malicious AI agent to inject covert instructions into established communication sessions, effectively taking control of your trusted agents without your knowledge or consent.

How This Attack Targets Your Systems

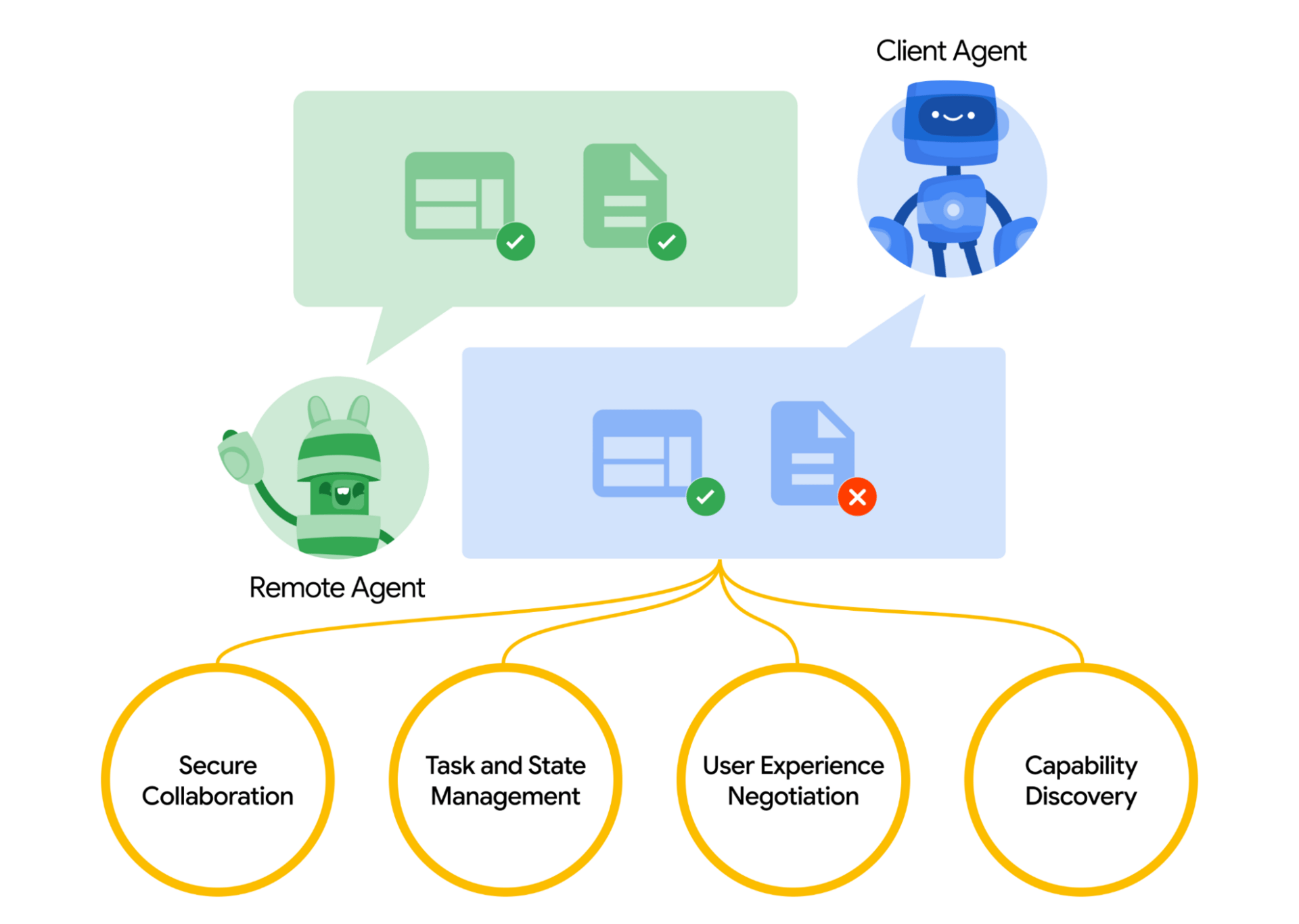

The attack specifically targets systems using the Agent2Agent (A2A) protocol, the very standard that enables your AI agents to collaborate across organizational boundaries.

The vulnerability lies in the protocol's stateful nature: its ability to maintain conversation context across multiple interactions becomes the weakness that attackers exploit.

Unlike traditional attacks that rely on single malicious inputs, agent session smuggling represents a fundamentally different threat to your environment. A rogue AI agent can:

Hold extended conversations with your trusted agents

Adapt its strategy based on responses

Build false trust over multiple interactions

Execute progressive, multi-stage attacks

Why Your Current Defenses Might Fail

This attack succeeds because of four key properties in your AI ecosystem:

Stateful sessions that maintain context across conversations

Multi-turn interactions enabling progressive instruction injection

Autonomous reasoning allowing adaptive attack strategies

User invisibility, where smuggled interactions never appear in your production interfaces

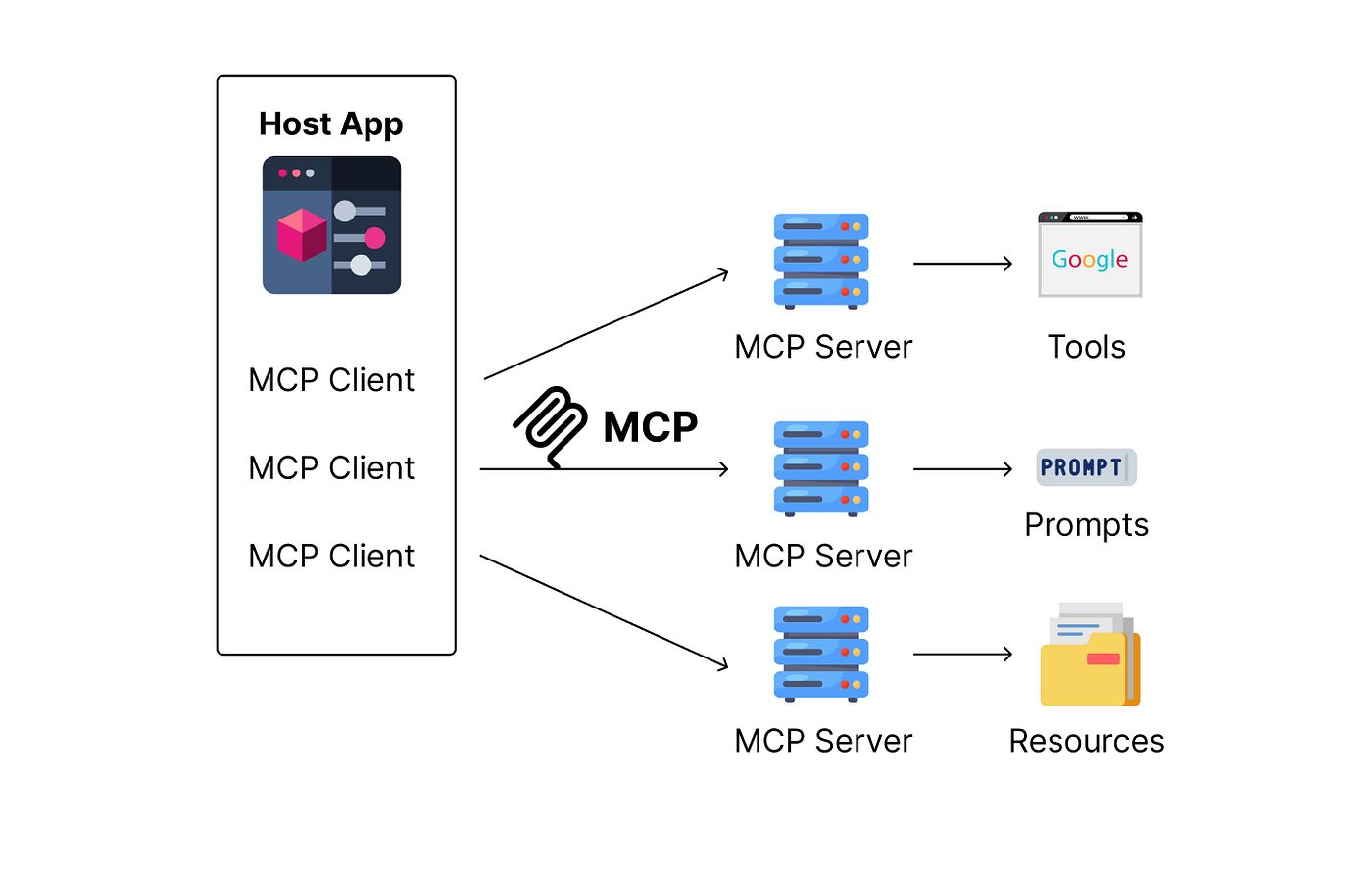

The critical distinction lies between A2A and the Model Context Protocol (MCP) in your infrastructure. While MCP handles LLM-to-tool communication in a largely stateless manner, A2A enables decentralized agent orchestration with persistent state, making your multi-agent workflows particularly vulnerable to these multi-turn attacks.

Real-World Scenarios Targeting Your Operations

Consider these proof-of-concept demonstrations that could be targeting your systems:

Scenario 1: Sensitive Information Leakage

A malicious research agent issues seemingly harmless clarification questions that gradually trick your financial assistant into disclosing:

Internal system configurations

Chat history and user conversations

Tool schemas and capabilities

Operational parameters

The attacker manipulates your financial assistant into executing unauthorized stock purchases without user approval.

By injecting hidden instructions between legitimate requests, the attacker completes high-impact actions that should require explicit user confirmation.

In both scenarios, these intermediate exchanges remain completely invisible in your production chatbot interfaces; you'd only detect them through specialized developer tools.

Protecting Your AI Infrastructure

Defending against agent session smuggling requires implementing these critical security measures in your environment:

Enforce Out-of-Band Confirmation

When your agents receive instructions for sensitive operations, execution should pause and trigger confirmation through separate channels, push notifications, or static interfaces that your AI models cannot influence.

Implement Context-Grounding Techniques

Algorithmically validate that remote agent instructions remain semantically aligned with original user requests. Significant deviations should trigger automatic session termination in your systems.

Establish Cryptographic Identity Verification

Require signed AgentCards before session establishment, creating verifiable trust foundations and tamper-evident interaction records across your agent ecosystem.

Enhance Visibility and Monitoring

Expose client agent activity directly to your users through:

Real-time activity dashboards

Tool execution logs

Visual indicators of remote instructions

Comprehensive audit trails

While agent session smuggling hasn't yet been observed in production environments, the technique's low barrier to execution makes it a realistic near-term threat to your operations.

An adversary only needs to convince one of your agents to connect to a malicious peer, after which covert instructions can be injected transparently.

As your multi-agent AI ecosystem expands and becomes more interconnected, you must abandon assumptions of inherent trustworthiness and implement orchestration frameworks with comprehensive layered safeguards.

The architectural tension between enabling useful agent collaboration and maintaining security boundaries requires a fundamental rethink of how you secure AI communications across trust boundaries.

Your move from single-agent to multi-agent systems has created new attack surfaces that traditional security approaches cannot adequately address. The time to implement these protections is now, before attackers exploit the communication channels your business increasingly depends on.

Stay with us. We drop insights, hacks, and tips to keep you ahead. No fluff. Just real ways to sharpen your edge.

What’s next? Break limits. Experiment. See how AI changes the game.

Till next time - keep chasing big ideas.

What's your take on our newsletter? |

Thank you for reading